
In this digital age, cybersecurity has become essential to every organization’s operations and an integral part of our consciousness as individuals. With the number of cyber threats rising steadily and critical systems being hacked or found to be vulnerable (as we posted in our blog a few weeks ago), innovative approaches are needed to protect sensitive assets from malicious actors.

One of the latest advancements in the field has been to leverage artificial intelligence (AI), and generative models in particular. AI can be helpful not just for the CISO/CSO and the security team, but also for the system, network, and other teams across the organization.

Understanding Generative Artificial Intelligence

You may already know this, but in case you don’t, here’s a quick primer: Generative Artificial Intelligence, or GAI, refers to a subset of AI techniques that create outputs, such as text or images, that mimic human-produced content. Thanks to advanced algorithms and training processes (which we will cover in later blog posts), GAI models have gained significant attention for their ability to generate realistic, contextually appropriate content.

Proprietary AI platforms, such as those from OpenAI and AI21 Labs, have made remarkable progress in developing generative AI models like GPT-3. We’ve seen schoolkids using them to write their homework and teachers using them to check it; doctors are using them to reach better and faster diagnoses, and lawyers are using them to find and understand relevant precedent. There are countless other ways they’re being leveraged, but here at XM Cyber, our security team tried out ChatGPT on certain cybersecurity problems, and to our delight, it yielded excellent results.

Ways GenAI Can Be Used in Cybersecurity

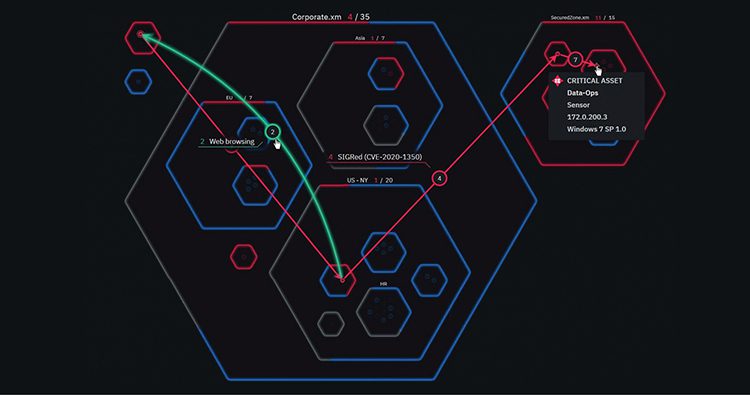

At XM Cyber, we are currently leveraging AI in multiple use cases for customers. For example, when our attack path analysis finds choke points, it recommends ways to remediate them. Often, however, IT cannot apply the recommended remediation, for any number of reasons. With GenAI, security teams gain the ability to negotiate with IT by identifying multiple alternative remediations that achieve the same result.
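To make the idea of choke points and interchangeable remediations more concrete, here is a minimal sketch in Python. It is not XM Cyber’s attack path analysis. It assumes a hypothetical toy attack graph in which an edge `u -> v` means an attacker on `u` can move to `v`, and it models a remediation as cutting a node off the graph. Under those assumptions, a choke point is a node whose removal alone blocks every path from the entry points to the critical asset, and any other minimal set of nodes that blocks every path is an alternative remediation with the same outcome. The node names and the `choke_points` and `alternative_remediations` helpers are illustrative only.

```python
from collections import deque
from itertools import combinations

# Hypothetical toy attack graph: an edge u -> v means an attacker on u can move to v.
ATTACK_GRAPH: dict[str, list[str]] = {
    "phishing_workstation": ["file_server", "jump_host"],
    "vpn_gateway": ["jump_host"],
    "file_server": ["domain_admin_creds"],
    "jump_host": ["domain_admin_creds"],
    "domain_admin_creds": ["crown_jewel_db"],
    "crown_jewel_db": [],
}
SOURCES = ["phishing_workstation", "vpn_gateway"]  # assumed attacker entry points
TARGET = "crown_jewel_db"                          # assumed critical asset


def reachable(graph, sources, target, removed=frozenset()):
    """Breadth-first search: can any source still reach target once `removed` nodes are cut?"""
    seen = {s for s in sources if s not in removed}
    queue = deque(seen)
    while queue:
        node = queue.popleft()
        if node == target:
            return True
        for nxt in graph.get(node, []):
            if nxt not in removed and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False


def choke_points(graph, sources, target):
    """Nodes (other than entry points and target) that lie on every attack path."""
    candidates = set(graph) - set(sources) - {target}
    return sorted(n for n in candidates
                  if not reachable(graph, sources, target, frozenset({n})))


def alternative_remediations(graph, sources, target, max_size=2):
    """Minimal node sets whose remediation blocks every path: same outcome, different fix."""
    candidates = sorted(set(graph) - set(sources) - {target})
    found = []
    for size in range(1, max_size + 1):
        for combo in combinations(candidates, size):
            if any(set(fix) <= set(combo) for fix in found):
                continue  # skip supersets of a fix we already have
            if not reachable(graph, sources, target, frozenset(combo)):
                found.append(combo)
    return found


if __name__ == "__main__":
    print(choke_points(ATTACK_GRAPH, SOURCES, TARGET))
    # ['domain_admin_creds']
    print(alternative_remediations(ATTACK_GRAPH, SOURCES, TARGET))
    # [('domain_admin_creds',), ('file_server', 'jump_host')]
```

In this toy graph, if IT cannot remediate `domain_admin_creds`, hardening both `file_server` and `jump_host` cuts the attacker off from `crown_jewel_db` just as effectively. GenAI’s role is to surface and explain options like this; the brute-force search over node combinations is simply the easiest way to show the idea and would not scale to large graphs.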

At the same time, we are working toward a dedicated generative pre-trained transformer (GPT) model per customer, allowing the CISO and the security team to ask about their riskiest entities and how to mitigate those risks.

Oh No! Houston, We Might Have a Problem…

However, using proprietary AI platforms in cybersecurity products raises more than a few concerns. While these models have immense potential for automating tasks such as writing code, helping security teams mitigate security issues, and even writing this blog post (!!!), they also introduce new privacy and security challenges. Here we’ll share the privacy concerns we had to address during our research, and explain why we believe open-source projects like NanoGPT and LlamaIndex, or private solutions such as Aleph Alpha, are a better way to ensure data privacy for our customers than well-known alternatives such as OpenAI (and its ChatGPT) or AI21 Labs.

To understand the privacy issue, imagine a company that shares data with its chosen AI platform to solve a stubborn problem, such as locating and fixing a bug that’s hurting performance. If the platform retains prompt data and uses it to train future versions of its model, that data can later surface outside the organization when other users query the tool for their own research. This is exactly the risk that surfaced at Samsung a few months ago, when employees shared confidential source code with ChatGPT.

In other words, telling an AI about your cybersecurity issue and asking for a solution can result in the AI telling someone else about your problem. Fixing the issue may take a while, and until then, the AI could “chat” about your vulnerability with anyone who asks. (And by the way, in case you were wondering: no, you can’t ask the AI to “forget” what you told it.)

Data leakage is just one concern of many. There are plenty of other ways AI can undermine security, such as hallucination (the model confidently generating false information), but we’ll delve into those in a future post.

Preserving Privacy and Security with Dedicated Modules

To address concerns around privacy and information leakage, we began researching and building a private module that is trained internally and then run as a dedicated service per customer. This isolation eliminates data leakage between customers, which helps mitigate privacy and security risks, and in the long term it provides better answers, not only for the security team but for other teams as well. This means that we, and our users, can leverage AI’s immense capabilities without fearing that it will gossip sensitive information to other users.
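The isolation principle behind a dedicated service per customer can be sketched in a few lines of Python. This is a hypothetical illustration, not our implementation: `TenantAssistant` stands in for one customer’s dedicated model service, its “model” is just naive keyword matching over that tenant’s own data, and `AssistantRouter` routes every request by tenant ID. Because there is no shared store, nothing one customer ingests can be retrieved by another.

```python
import re
from dataclasses import dataclass, field


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens (letters, digits, underscores)."""
    return set(re.findall(r"\w+", text.lower()))


@dataclass
class TenantAssistant:
    """Hypothetical stand-in for one customer's dedicated model service."""
    tenant_id: str
    documents: list[str] = field(default_factory=list)

    def ingest(self, text: str) -> None:
        # Data is stored only inside this tenant's own instance.
        self.documents.append(text)

    def answer(self, question: str) -> list[str]:
        # Placeholder for model inference: return this tenant's documents
        # that share at least one word with the question.
        query = tokenize(question)
        return [doc for doc in self.documents if query & tokenize(doc)]


class AssistantRouter:
    """Routes each request to the caller's own assistant; there is no shared store."""

    def __init__(self) -> None:
        self._assistants: dict[str, TenantAssistant] = {}

    def for_tenant(self, tenant_id: str) -> TenantAssistant:
        # Lazily create exactly one dedicated assistant per tenant.
        if tenant_id not in self._assistants:
            self._assistants[tenant_id] = TenantAssistant(tenant_id)
        return self._assistants[tenant_id]


if __name__ == "__main__":
    router = AssistantRouter()
    router.for_tenant("acme").ingest("jump_host exposes cached domain admin credentials")
    question = "Which host exposes credentials?"
    print(router.for_tenant("acme").answer(question))
    # ['jump_host exposes cached domain admin credentials']
    print(router.for_tenant("globex").answer(question))
    # []
```

The design choice that matters here is structural: isolation comes from never pooling customers’ data in the first place, rather than from trying to filter a shared model’s answers after the fact.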

Can AI Replace Me?

Another thing we often found ourselves wondering was whether AI would eventually replace us. I’m happy to report that over time it became evident that AI serves as a supportive tool rather than a replacement: it provides great feedback and helpful information, but it doesn’t execute, or even provide, the complete answer to a given problem.

And so, our security team learned how to “drive” the system and give better instructions to other teams, such as the system/IT and DevOps teams, improving the flow of information between security and the teams that support it. As we watched relationships such as AI=>Sec=>IT grow, we started planning how to deliver this feedback directly to other teams within the organization.

Conclusion

Plenty of companies have gone full steam ahead with AI and its capabilities (or at least say they have). Our view is that it’s promising and quite possibly the future of better cybersecurity. We look forward to using it to find faster, more accurate, and simply MORE ways to remediate. At the same time, it must be used responsibly and with a full view of its potential pitfalls. As the cybersecurity landscape evolves, private AI vendors and open-source initiatives like NanoGPT will be crucial in empowering companies to embrace AI-driven defenses without compromising data privacy and security.