XM Cyber is constantly striving to improve its products and help our clients better protect their most critical assets. As part of this mission, we needed to increase scalability. By migrating to Big Data and stream processing, we achieved this objective, overcame some challenges along the way, and learned a few lessons that we share in this post.

First, Some Background Information

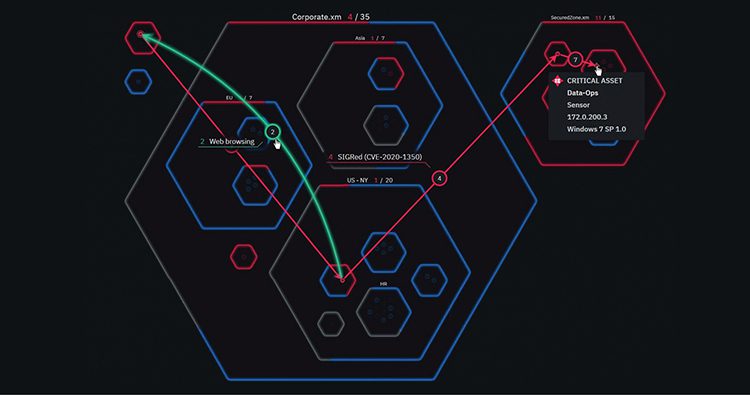

XM Cyber’s breach and attack simulation software helps identify vulnerabilities in our clients’ security environments. Our clients install OS-specific agents (Mac, Linux, or Windows) on their network endpoints. These agents collect metadata such as OS information and network and registry configuration, which is then used to formulate simulated cyber attacks.

Here’s a simple use case that shows how this works:

If the metadata collected from an agent indicates that an endpoint is running an old OS version with a publicly available exploit, we simulate an attack based on this metadata.

We conclude that an attacker could have used this weakness to penetrate and move laterally through the network. An attacker who has penetrated the network will be able to move from endpoint to endpoint, compromising vulnerable endpoints as he goes along until he reaches the crown jewel assets and exfiltrates them.
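To make the idea of lateral movement concrete, here is a minimal sketch in plain Python. It is only an illustration, not XM Cyber's simulation engine: the network layout, the endpoint names, and the `compromised_endpoints` function are all hypothetical. It walks outward from a breached endpoint and only steps onto endpoints flagged as vulnerable.

```python
from collections import deque


def compromised_endpoints(start, links, vulnerable):
    """Breadth-first walk from a breached endpoint across vulnerable endpoints."""
    seen = {start}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for neighbor in links.get(current, ()):
            # The simulated attacker can only move onto a vulnerable endpoint.
            if neighbor in vulnerable and neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen


# Hypothetical network: which endpoints can connect to which.
links = {"laptop": ["file-server", "printer"], "file-server": ["db"], "printer": []}
# Hypothetical result of the metadata check (e.g. exploitable OS version).
vulnerable = {"file-server", "db"}

print(sorted(compromised_endpoints("laptop", links, vulnerable)))
```

In this toy network the walk reaches `db` through `file-server`, while `printer` stays untouched because it is not flagged as vulnerable.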

Before: Our Previous System

In our prior architecture, we used a server with multiple Node.js processes. Each Node.js process contained a group of attack modules, which gathered data from agents and processed it into simulated attacks. These processes and individual attack modules communicated with each other using RabbitMQ or MongoDB and relied on caching systems we had implemented in-house. This let two different attack modules interact and share the information received from agents, which is more efficient than asking each agent individually for the same metadata. However, the number of Node.js processes was fairly fixed, as was the number of attack modules. Splitting an attack module across multiple processes was difficult, and writing and maintaining the cache systems took too much of our time.

To increase scalability, we needed a new approach. To help illuminate the path we chose to take, let’s view things through the prism of a fairly simple attack implementation.

Designing an Attack

Our use case is an RDP attack simulation. RDP (Remote Desktop Protocol) allows users to connect to a Windows computer remotely, but it is often used maliciously. Typical RDP-based attacks include man-in-the-middle attacks and brute-forcing sign-in credentials.

In our case, let’s consider another common RDP tactic: Session Hijacking. This attack has a simple premise: the attacker compromises a computer and waits for a user to open a remote connection. Once this happens, the attacker uses that connection to compromise the second (remote) computer.

In the context of XM Cyber, a simulated Session Hijacking attack is implemented as follows: First, we collect RDP session metadata from all computers. Then, we match sessions based on their RDP session ID. This gives us the metadata of all successful sessions made in the network, grouped by session ID. We use this data later to identify which sessions could potentially be compromised and which are safe.
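The matching step described above can be sketched in a few lines of plain Python. This is a simplified illustration under our own assumptions: the record fields (`session_id`, `host`, `role`) and the `match_sessions` function are hypothetical and do not reflect the actual agent schema.

```python
def match_sessions(records):
    """Pair the client and server halves of each RDP session by session ID."""
    pending = {}  # session_id -> first half seen, still waiting for its partner
    matched = {}  # session_id -> {"client": host, "server": host}
    for record in records:
        session_id = record["session_id"]
        other = pending.pop(session_id, None)
        if other is None:
            # First time we see this session ID: remember it and wait.
            pending[session_id] = record
        elif other["role"] != record["role"]:
            # Both sides seen: the session is complete.
            matched[session_id] = {
                other["role"]: other["host"],
                record["role"]: record["host"],
            }
        else:
            # Same side reported twice: keep waiting for the other side.
            pending[session_id] = other
    return matched, pending


# Hypothetical metadata reported by three agents.
records = [
    {"session_id": "s1", "host": "pc-1", "role": "client"},
    {"session_id": "s2", "host": "pc-2", "role": "client"},
    {"session_id": "s1", "host": "srv-1", "role": "server"},
]
matched, pending = match_sessions(records)
print(matched)
print(list(pending))
```

Note that `pending` keeps every unmatched half until its partner shows up, so its size depends on how many sessions are still incomplete.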

How can we execute this simple piece of logic? There are two main options:

We can collect and match sessions in memory, which has relatively high throughput. However, there is also a drawback to this approach: in use cases with high numbers of messages (such as matching TCP/IP packets), there simply won’t be enough memory to hold all the data waiting to be matched.

Another implementation option is to query the database for matches. Throughput will be lower, but this approach can handle a much higher load than a memory-based implementation. However, it still requires sharding and time-consuming configuration.
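As an illustration of the database option, the sketch below matches the two sides of each session with a self-join. It uses an in-memory SQLite database purely as a stand-in so the example runs anywhere; it is not the database from our architecture, and the table and column names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE rdp_sessions (session_id TEXT, host TEXT, role TEXT)")
conn.executemany(
    "INSERT INTO rdp_sessions VALUES (?, ?, ?)",
    [
        ("s1", "pc-1", "client"),
        ("s2", "pc-2", "client"),
        ("s1", "srv-1", "server"),
    ],
)

# Self-join: pair each client row with the server row sharing its session ID.
rows = conn.execute(
    """
    SELECT c.session_id, c.host, s.host
    FROM rdp_sessions AS c
    JOIN rdp_sessions AS s ON s.session_id = c.session_id
    WHERE c.role = 'client' AND s.role = 'server'
    ORDER BY c.session_id
    """
).fetchall()
print(rows)
```

The state now lives in the database rather than in process memory, at the cost of a query for every matching pass.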

Ultimately, both implementations place significant constraints on scalability and create time-consuming inefficiencies.

After: A Better Solution

Big Data provides another way to approach this task. XM Cyber’s agents create an enormous amount of data and metadata, and Big Data systems are built to handle massive amounts of information. A Big Data system typically scales by partitioning the data according to the key used in a query. In the example of our Session Hijacking attack, if we want to match RDP requests by their session ID, a Big Data solution distributes the work by session ID, which makes it very efficient without requiring us to implement anything scale-related ourselves. Big Data systems also feature powerful built-in tools such as joins (which fuse two pieces of information together), windowing, interval joins, and more, which reduces development time even further.
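The idea of scaling by key can be sketched as follows. This is a toy model in plain Python, not how any particular engine assigns keys internally: the `subtask_for` and `route` functions and the choice of CRC32 as the hash are our own assumptions for illustration.

```python
import zlib


def subtask_for(session_id, parallelism):
    """Pick a worker for a key using a stable hash of the session ID."""
    return zlib.crc32(session_id.encode("utf-8")) % parallelism


def route(records, parallelism):
    """Distribute (session_id, payload) records across parallel workers."""
    subtasks = [[] for _ in range(parallelism)]
    for session_id, payload in records:
        subtasks[subtask_for(session_id, parallelism)].append((session_id, payload))
    return subtasks


records = [("s1", "client"), ("s2", "client"), ("s1", "server")]
for index, bucket in enumerate(route(records, parallelism=4)):
    print(index, bucket)
```

Because the worker is derived from the session ID alone, both halves of a session always land on the same worker, and adding workers only changes the `parallelism` value.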

Batch vs. Stream

When using a Big Data system, there are two approaches for processing information: batch and stream.

Batch is fast, efficient, easy to learn, and a great choice when you’re dealing with large datasets or running complex algorithms that require access to the full batch. If, for example, you want to store an entire dataset, this is a smart approach. However, it’s important to note that batch processing is processing after the fact, which precludes real-time results.

Stream processing handles unbounded datasets. Rather than performing calculations on a batch of records, it works with a central dataset that receives continuous updates and processes new records as they arrive. Open streams feed the dataset continuously, and each stream provides a different type of record.

If you’re looking for sub-second latency and real-time data, or if your system must make split-second decisions, detect anomalies, or seize opportunities when they happen, stream should be your choice. If slight delays aren’t as important and you’re dealing with large datasets (a periodically updated list, for example), batch may be the superior option.

Why Stream Worked for XM Cyber

Batch and stream are both suitable for our use case. Batch has many great qualities, but a few issues led us not to choose it. First, we want to find potential attacks in real time. Second, our data is constantly evolving: user behavior and network activity metadata continuously flow into our system. We want to make decisions based on each individual message as it is received and check it against all the data we have already collected and calculated. With batch, we calculate all the data at once and output a result, so to use batch with our type of input we would need to recalculate the entire state from scratch every time a message is received (each time some user clicks on a link) instead of processing only the latest message.
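The difference in work can be illustrated with a small sketch. It is a toy comparison in plain Python, with hypothetical message fields and names (`batch_counts`, `StreamingCounts`), that counts sessions per host in both styles.

```python
from collections import Counter


def batch_counts(all_messages):
    """Batch style: recompute the full result from every message seen so far."""
    return Counter(message["host"] for message in all_messages)


class StreamingCounts:
    """Stream style: keep state and update it with only the newest message."""

    def __init__(self):
        self.counts = Counter()

    def on_message(self, message):
        self.counts[message["host"]] += 1
        return self.counts


messages = [{"host": "pc-1"}, {"host": "pc-2"}, {"host": "pc-1"}]
history, streaming, batch_reads = [], StreamingCounts(), 0
for message in messages:
    history.append(message)
    batch_result = batch_counts(history)  # rereads 1, then 2, then 3 messages
    batch_reads += len(history)
    stream_result = streaming.on_message(message)  # touches one message

print(batch_result == stream_result, batch_reads, len(messages))
```

Both styles end with the same counts, but the batch variant reads six messages in total for three arrivals, and that gap widens as the history grows.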

We chose Apache Flink as our stream processing engine. Flink was built for stream processing and offers top-of-the-line performance.

Next, we’ll provide you with a bit of code.

Flink Code Example

Here’s the RDP attack example outlined above, implemented in Flink.

Start by creating an RDP session stream, which will stream RDP session metadata from all agents:
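As a rough, framework-free illustration of this step (plain Python, not Flink's API), the sketch below merges per-agent feeds into a single stream ordered by event time. The `RdpSession` fields and the `rdp_session_stream` function are hypothetical, and each agent feed is assumed to be already sorted by timestamp.

```python
import heapq
from typing import Iterable, Iterator, NamedTuple


class RdpSession(NamedTuple):
    timestamp: int   # event time, in seconds
    session_id: str
    host: str
    role: str        # "client" (outgoing) or "server" (incoming)


def rdp_session_stream(agent_feeds: Iterable[Iterable[RdpSession]]) -> Iterator[RdpSession]:
    """Lazily merge the per-agent feeds into one stream ordered by event time."""
    return heapq.merge(*agent_feeds, key=lambda record: record.timestamp)


feed_a = [RdpSession(10, "s1", "pc-1", "client"), RdpSession(30, "s2", "pc-1", "client")]
feed_b = [RdpSession(12, "s1", "srv-1", "server")]
for record in rdp_session_stream([feed_a, feed_b]):
    print(record)
```

In a real job, this role is played by a source connected to the execution environment rather than by in-memory lists.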

Then filter the main RDP stream based on whether each record is a client or a server request (an outgoing or an incoming RDP session). This splits it into two separate streams, one for each type of session:
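A plain-Python sketch of the split follows. It is not Flink code; in Flink this would typically be two `filter` operators applied to the same stream. The record fields and the `only_role` helper are hypothetical.

```python
from typing import Iterable, Iterator, NamedTuple


class RdpSession(NamedTuple):
    timestamp: int
    session_id: str
    host: str
    role: str  # "client" (outgoing) or "server" (incoming)


def only_role(stream: Iterable[RdpSession], role: str) -> Iterator[RdpSession]:
    """Lazily keep the records whose role matches."""
    return (record for record in stream if record.role == role)


records = [
    RdpSession(10, "s1", "pc-1", "client"),
    RdpSession(12, "s1", "srv-1", "server"),
    RdpSession(30, "s2", "pc-1", "client"),
]
# `records` is a list here, so it can safely be iterated twice.
client_stream = list(only_role(records, "client"))
server_stream = list(only_role(records, "server"))
print(len(client_stream), len(server_stream))
```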

Next, we partition the streams based on session ID:
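Conceptually, partitioning by key looks like the sketch below. This plain-Python `key_by` helper is our own stand-in that only mimics the effect of Flink's `keyBy` operator on a finite list. The record fields are hypothetical.

```python
from collections import defaultdict
from typing import NamedTuple


class RdpSession(NamedTuple):
    timestamp: int
    session_id: str
    host: str
    role: str


def key_by(stream, key_selector):
    """Group records into one partition per key."""
    partitions = defaultdict(list)
    for record in stream:
        partitions[key_selector(record)].append(record)
    return dict(partitions)


client_stream = [
    RdpSession(10, "s1", "pc-1", "client"),
    RdpSession(30, "s2", "pc-1", "client"),
]
server_stream = [RdpSession(12, "s1", "srv-1", "server")]

keyed_clients = key_by(client_stream, lambda record: record.session_id)
keyed_servers = key_by(server_stream, lambda record: record.session_id)
print(sorted(keyed_clients), sorted(keyed_servers))
```

Keying both streams with the same selector is what lets a later join look only at records that share a session ID.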

And use Flink’s built-in interval join operator to match client and server requests with the same session ID based on a 20-minute time window:
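The semantics of an interval join can be sketched in plain Python as follows. This is an illustration, not Flink's operator: the `interval_join` function and the record fields are hypothetical, and we assume the 20-minute window is symmetric, meaning the server record may be up to 20 minutes before or after the client record.

```python
from typing import NamedTuple

WINDOW_SECONDS = 20 * 60  # assumed symmetric 20-minute bound


class RdpSession(NamedTuple):
    timestamp: int  # event time, in seconds
    session_id: str
    host: str
    role: str


def interval_join(clients, servers, lower=-WINDOW_SECONDS, upper=WINDOW_SECONDS):
    """Pair records with equal session IDs where
    client.timestamp + lower <= server.timestamp <= client.timestamp + upper."""
    servers_by_id = {}
    for server in servers:
        servers_by_id.setdefault(server.session_id, []).append(server)
    matches = []
    for client in clients:
        for server in servers_by_id.get(client.session_id, []):
            if client.timestamp + lower <= server.timestamp <= client.timestamp + upper:
                matches.append((client, server))
    return matches


clients = [RdpSession(1000, "s1", "pc-1", "client"), RdpSession(1000, "s2", "pc-2", "client")]
servers = [RdpSession(1060, "s1", "srv-1", "server"), RdpSession(3000, "s2", "srv-2", "server")]
for client, server in interval_join(clients, servers):
    print(client.host, "->", server.host)
```

Here only the `s1` pair matches, because the `s2` records are 2000 seconds apart, which is outside the window. Unlike this sketch, which needs both lists up front, a stream processor evaluates the join incrementally as records arrive.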

Note that this piece of code, as simple as it is, can scale to hundreds of servers with minimal change and effort.

Once we finished our first attack implementation, we connected Flink to a client and waited…

We were truly amazed. Our throughput was millions per second and we got first results in a heartbeat.

Everything scale-related worked beautifully. Just like magic.

Bumps In The Road

There were, however, a few bumps in the integration process (such as CPU usage and serialization issues) that required some work from our team to iron out. Fixing these kinds of problems made more sense to us than dealing with the problems we faced in our previous monolithic solution. With Flink, the infrastructure to handle large scale was already built, and we only needed to tweak and configure it to our specific needs instead of designing and building our own custom-made big data machine.

The Bottom Line

Overall, we had great results using Big Data and Flink for our use case and we believe that there are many other use cases within XM Cyber that would benefit from this approach using Flink and other big data solutions.

If you’d like to hear about our experience with Big Data and Flink in greater detail, we encourage you to watch our full webinar.

Yaron Shani is Senior Cyber Security Researcher at XM Cyber

Sophia Morozov is Software Developer and Researcher at XM Cyber
